About me

I earned a BS in Comprehensive Kinesiological Sciences from the University of Nevada, Las Vegas, and I have a diverse background in anatomy, physiology, and biomechanics. I began the MS program at the University of Idaho in 2022 and will complete my MS in Experimental Psychology with an Emphasis in Human Factors in May 2023. Upon completing the program, I will also receive a certificate as an Associate Human Factors Professional. Throughout my master’s program, I have gained knowledge of engineering and psychology as they relate to human factors principles, as well as experience on the research side of the field. I would love to pursue a career that gives me the opportunity to expand my skill set while taking part in projects that draw on my background in conjunction with my new proficiency in Human Factors.

UX/UI Research Internship

Overview

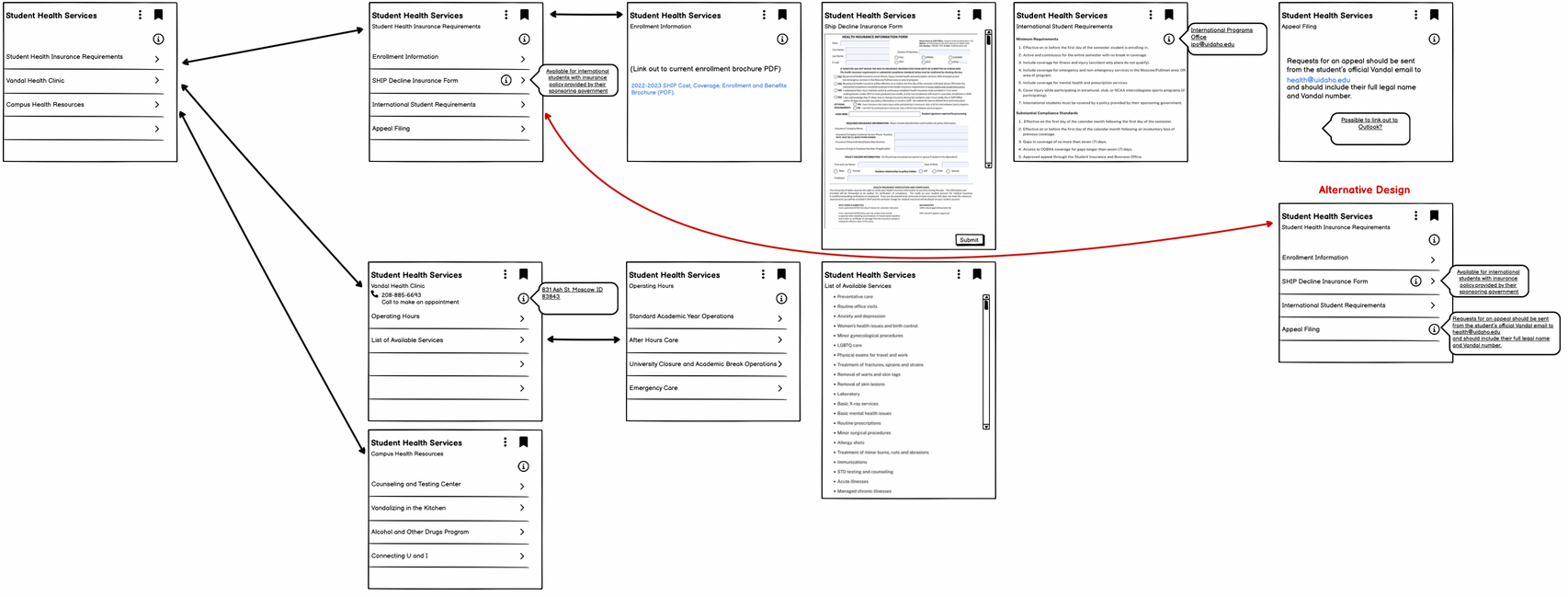

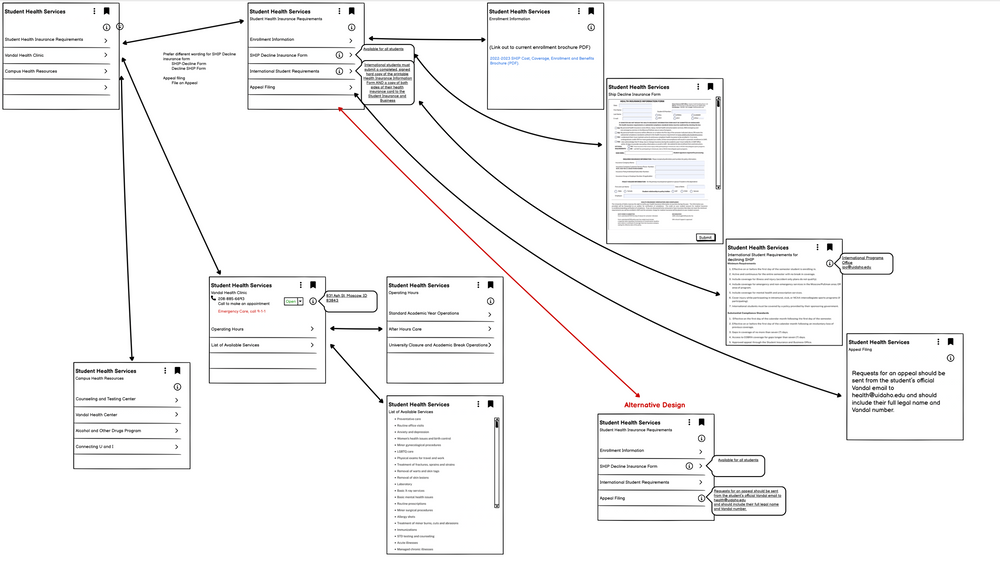

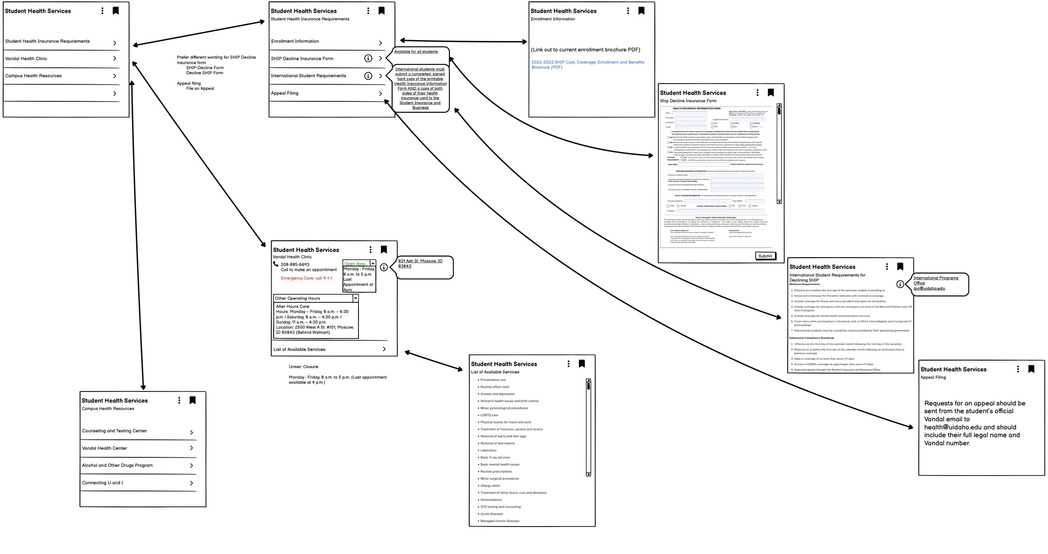

Since January 2023, I have been working with a cross-functional team that includes a UX research consultant and a software engineer, among others. The team's primary task is to design and implement MyUI, a new digital system for University of Idaho students, faculty, and staff; the goal is to improve their experience and consolidate the many systems currently in use. My tasks have included wireframing and prototyping the student health services card and initiating research with key stakeholders to discuss current pain points and potential design opportunities. Our approach is user-centered design supported by research and end-user data.

at the start

When I first started, I was briefed on the tools the team currently uses to complete its work: the Ellucian Experience platform, Adobe XD for prototyping, Balsamiq for wireframing, and Miro for project organization. My first task was to conduct initial research on student health services information and then create a low-fidelity wireframe to present at an ideation session. To complete this task, I reviewed the student health services pages on the university website, which is where students must go for this information. I gathered all the necessary information and organized it into logical groups. Using Balsamiq, I created the first low-fidelity wireframe pictured below.

During the ideation session with the full team, I presented my research findings and the wireframe; the session was meant to encourage collaboration and idea-building on how to improve the wireframe and the presentation of information. Before initiating further research with stakeholders, we held two more ideation sessions, which led to the two further iterations of the wireframe pictured below.

The next step in the design process was to meet with the stakeholders involved in student health services to get their feedback on my wireframe and on the information students need. The meeting included me, the team, and five stakeholders. We began by giving an overview of MyUI and the research conducted so far. The stakeholders then shared the pain points they currently run into and their ideas for change. Finally, I presented the updated wireframe in detail; we noted their questions and ideas as we went and used them later in another ideation session.

future

Currently, I am preparing to start on the high-fidelity prototype, using the wireframe and the new information from the stakeholders. The next step in the iterative design process is to complete the prototype and test it with end users in a feedback session, where qualitative data will be collected to further develop the prototype. Once the high-fidelity prototype is complete, usability tests will be conducted to collect quantitative data, which will be used to finalize the design before it moves on to the development team.

Overview

This pilot study was conducted under the supervision of Dr. Barton in the Palouse Injury Research Lab at the University of Idaho. It was a two-person research team, and my lab partner throughout the process was a fellow graduate student, Kyle Banzon. We used our knowledge of cognitive psychology and research methods to design a thorough study with human participants. The study used an ABAB design to answer two research questions. The first, which was my portion, assessed the impact that errors in automation have on a user’s trust. The second, Kyle’s portion, investigated the extent to which parallel processing helps distribute human cognitive load.

Methods

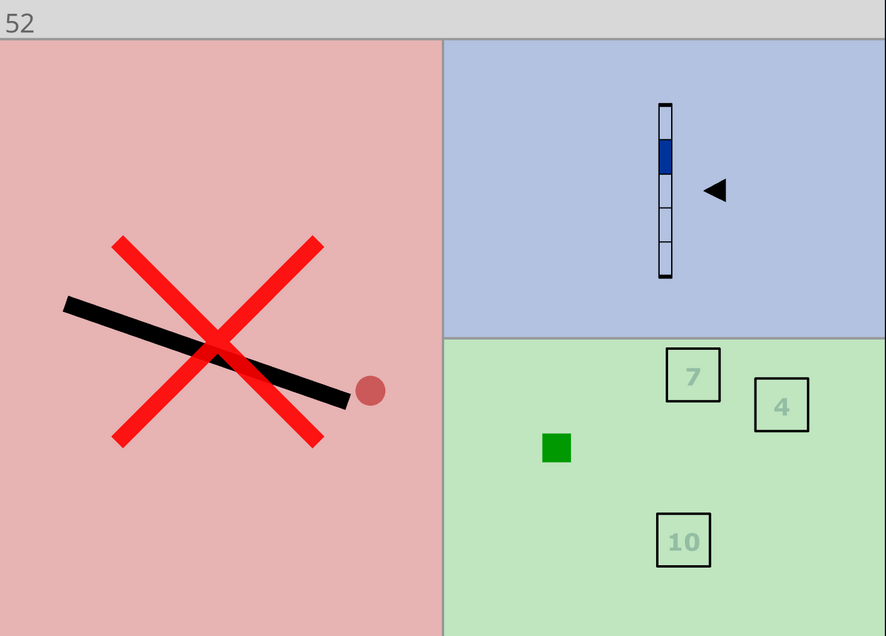

The study was designed to address both research questions. We used a multitasking game (pictured below) that adds a new visuospatial task at regular intervals, making the game more difficult for the participant as time goes on. Participant performance was measured on a continuous scale as the time, in seconds, that the participant lasted; a longer time indicates higher performance. New tasks were introduced at 15, 40, and 80 seconds, for a total of four games. If a participant lasted longer than 120 seconds, the difficulty of the four games increased. Following the ABAB design, my portion of the study consisted of four trials: automation was introduced in trials 2 and 4, while trials 1 and 3 were controlled only by the participant. The idea was to introduce the automation system in trial 2, withdraw it in trial 3, and reintroduce it in trial 4, this time with errors. Participants were not told about the automation errors before beginning the study.
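To make the trial structure concrete, here is a minimal Python sketch of the ABAB schedule and the task timing described above. The names `Trial`, `SCHEDULE`, `active_tasks`, and `difficulty_increased` are hypothetical, and the code assumes that one game is active from 0 seconds with the other three added at 15, 40, and 80 seconds; it is an illustration of the design, not the software used in the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    number: int
    phase: str               # "A" = participant-only control, "B" = automation
    automation: bool         # automation assists the participant
    automation_errors: bool  # automation makes induced errors


# ABAB schedule: automation in trials 2 and 4, errors only in trial 4.
SCHEDULE = (
    Trial(1, "A", automation=False, automation_errors=False),
    Trial(2, "B", automation=True, automation_errors=False),
    Trial(3, "A", automation=False, automation_errors=False),
    Trial(4, "B", automation=True, automation_errors=True),
)

# Assumption: one game runs from the start; the other three are added
# at 15, 40, and 80 seconds, for four games in total.
TASK_ONSETS_S = (0, 15, 40, 80)
DIFFICULTY_RAMP_S = 120  # difficulty increases after this many seconds


def active_tasks(elapsed_s: float) -> int:
    """Number of games running after `elapsed_s` seconds."""
    return sum(1 for onset in TASK_ONSETS_S if elapsed_s >= onset)


def difficulty_increased(elapsed_s: float) -> bool:
    """True once the participant has lasted longer than 120 seconds."""
    return elapsed_s > DIFFICULTY_RAMP_S


if __name__ == "__main__":
    # Trust questionnaires follow the automation trials.
    print([t.number for t in SCHEDULE if t.automation])
    print(active_tasks(50), difficulty_increased(130))
```

Running it prints `[2, 4]` (the trials followed by the trust questionnaire) and then `3 True`.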

results

After trials 2 and 4, I gave each participant a trust-in-automation questionnaire. The first questionnaire provided a baseline score for the participant’s trust in automation; the second measured their trust after the induced automation errors, so the difference between the two reflected any change in trust. After the study was complete, I conducted a paired-sample t-test comparing the trial 2 and trial 4 questionnaire scores. If the hypothesis was correct, the automation errors would reduce participants’ trust in the automation and therefore lower their total score. The data indicated a significant difference between the means at the 95% confidence level, with an effect size of 98%, so I was able to reject the null hypothesis that there would be no change in trust in automation.
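A paired-sample t-test works on each participant’s difference between the two questionnaire scores: t = mean(d) / (sd(d) / sqrt(n)), with n - 1 degrees of freedom. Below is a minimal, standard-library-only Python sketch of that calculation. The function name `paired_t_test` is hypothetical, and the effect size it returns is Cohen’s d_z (mean(d) / sd(d)), one common choice for paired designs that is assumed here rather than taken from the study. For a p-value, SciPy’s `scipy.stats.ttest_rel` runs the same test.

```python
import math
from statistics import mean, stdev


def paired_t_test(before, after):
    """Paired-sample t statistic for two equal-length lists of scores.

    Differences are computed as after - before for each participant.
    Returns (t, degrees_of_freedom, cohens_dz).
    """
    if len(before) != len(after):
        raise ValueError("paired samples must have the same length")
    if len(before) < 2:
        raise ValueError("need at least two pairs")
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    d_bar = mean(diffs)   # mean difference
    s_d = stdev(diffs)    # sample standard deviation (n - 1 denominator)
    if s_d == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = d_bar / (s_d / math.sqrt(n))
    cohens_dz = d_bar / s_d
    return t, n - 1, cohens_dz


if __name__ == "__main__":
    # Made-up scores for illustration only; not data from the study.
    trial2 = [5, 6, 7]
    trial4 = [3, 5, 4]
    t, df, dz = paired_t_test(trial2, trial4)
    print(f"t({df}) = {t:.3f}, d_z = {dz:.2f}")
```

With these made-up scores it prints `t(2) = -3.464, d_z = -2.00`; a negative t means scores dropped from trial 2 to trial 4, the direction the hypothesis predicted.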

summary

The purpose of this assignment was to evaluate a product against a relevant standard of our choice. The goal was to build skills in evaluation and analysis and to learn about the diversity of industry standards. I chose to evaluate the HDT Storm Search and Rescue Tactical Vehicle (SRTV) against MIL-STD-1472-G, a widely used U.S. military human engineering standard that sets design criteria for the development of military products such as vehicles and systems.

The HDT Storm SRTV was designed for the United States Air Force; the design choices and tools that make up this vehicle were intended for multi-purpose use and allow it to take on many challenges on and off the ground. Publicly available information about the vehicle was very limited because of its classified nature. Using the information I could gather, I searched the standard by keyword to find the relevant standard numbers and their associated descriptions. I grouped the standards into general requirements and detailed requirements. For the assessment table, I included the standard number, name, description, compliance outcome, and reasoning. In total, I evaluated the HDT Storm SRTV against 10 design standards, of which 50% were compliant, 30% were compliant with exceptions, and 20% were not compliant. Although most of the assessed standards were met fully or with exceptions, there are many other military standards the vehicle may not comply with, which could be assessed in the future.
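The percentages above come from tallying the compliance outcome column of the assessment table. Here is a minimal Python sketch of that tally, assuming one row per standard with the five columns listed above. The class and function names are hypothetical, and the placeholder rows only reproduce the 5/3/2 split; they are not the actual standards evaluated.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class AssessmentRow:
    standard_number: str
    name: str
    description: str
    outcome: str      # "compliant", "exception", or "not compliant"
    reasoning: str


def compliance_summary(rows):
    """Percentage of assessed standards for each compliance outcome."""
    if not rows:
        return {}
    counts = Counter(row.outcome for row in rows)
    return {outcome: 100 * count / len(rows) for outcome, count in counts.items()}


if __name__ == "__main__":
    # Hypothetical placeholder rows, one per evaluated standard.
    outcomes = ["compliant"] * 5 + ["exception"] * 3 + ["not compliant"] * 2
    rows = [
        AssessmentRow(f"placeholder-{i}", "name", "description", outcome, "reasoning")
        for i, outcome in enumerate(outcomes, start=1)
    ]
    print(compliance_summary(rows))
```

It prints `{'compliant': 50.0, 'exception': 30.0, 'not compliant': 20.0}`.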

purpose

The purpose of this project was to introduce us to the user-centered design process. To begin, we were asked to identify an interface to redesign and the reason for redesigning it. I chose to redesign the Apple CarPlay interface to reduce driver distraction. Before redesigning, I reviewed existing solutions for decreasing driver distraction and identified pain points to explore further in qualitative research. I also identified the stakeholders involved in the redesign; because the redesign was for learning purposes, we collaborated only with users rather than with all stakeholders.

research

I started by conducting a Qualtrics survey, which received 11 responses. The survey began with a group-assignment question: “Have you ever used Apple CarPlay in a vehicle?” The qualitative data I gathered included likes and dislikes, desired apps, tactile feedback preferences, and ideas for potential features. I also collected user reviews of the best Apple and third-party apps in CarPlay. To conclude the early stages, I established initial functional and non-functional requirements for the redesign.

prototyping

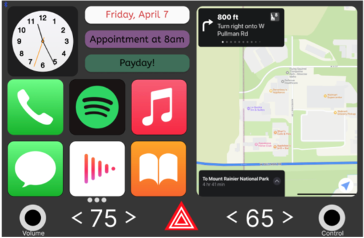

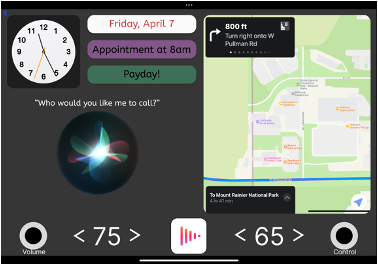

I designed the first high-fidelity working prototype in Figma, based on a low-fidelity wireframe of a potential layout. I created both a driver view and a passenger view because, although the goal was to decrease driver distraction, I didn’t want to limit what a passenger could do. The two views looked the same; however, the passenger view offered a swiping menu, indicated by three dots, for accessing more apps, as seen below.

iterative design

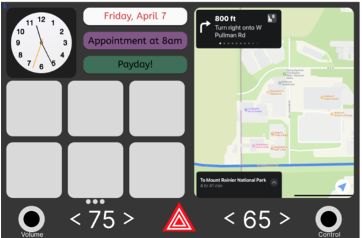

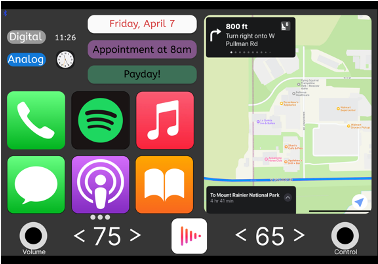

Following the initial prototype, I conducted usability tests with five participants. I measured their effectiveness and efficiency with the prototype and gathered subjective feedback using the think-aloud method. Some errors occurred because of the prototype’s limitations, but otherwise, users’ mental models were accurate. Subjective feedback included the need for a choice of clock display and for Siri to tell the user which app they are accessing. After the usability tests, I refined my requirements and created a new iteration of my prototype, which can be seen below.

After the second iteration, I proposed improvements for a potential future iteration. First, the control knob used to access apps in my prototype is far from the driver, so remapping that control would help drivers. Second, a future design could let drivers customize the number of available apps if they do not need all six. Overall, this project helped me gain new and relevant skills, including wireframing, prototyping, and usability testing, and gave me a way to apply my research skills.
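Usability tests commonly operationalize effectiveness as task completion rate and efficiency as time on task (ISO 9241-11 frames usability in terms of effectiveness, efficiency, and satisfaction). Here is a minimal Python sketch of those two measures, assuming each attempt is logged with a success flag and a duration. The record fields, function names, and sample attempts are hypothetical and are not results from these tests.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    participant: str
    task: str
    completed: bool
    seconds: float    # time on task


def completion_rate(attempts):
    """Effectiveness: share of attempts completed successfully (0 to 1)."""
    if not attempts:
        raise ValueError("no attempts recorded")
    return sum(a.completed for a in attempts) / len(attempts)


def mean_time_on_success(attempts):
    """Efficiency: mean time in seconds over successful attempts only."""
    times = [a.seconds for a in attempts if a.completed]
    if not times:
        raise ValueError("no successful attempts")
    return mean(times)


if __name__ == "__main__":
    # Hypothetical attempts at one task; not data from the study.
    attempts = [
        TaskAttempt("P1", "open music app", True, 10.0),
        TaskAttempt("P2", "open music app", False, 30.0),
        TaskAttempt("P3", "open music app", True, 20.0),
        TaskAttempt("P4", "open music app", True, 15.0),
    ]
    print(completion_rate(attempts), mean_time_on_success(attempts))
```

With the sample attempts it prints `0.75 15.0`. Timing only successful attempts keeps failed tries from inflating the efficiency figure; reporting failures separately through the completion rate is a design choice, not the only option.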

purpose

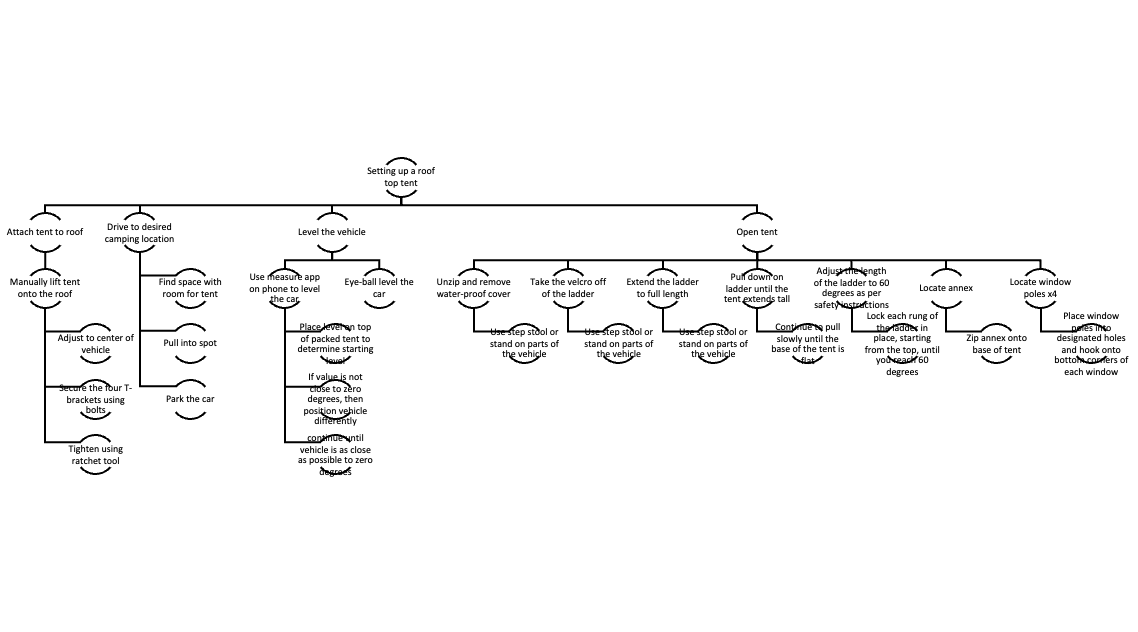

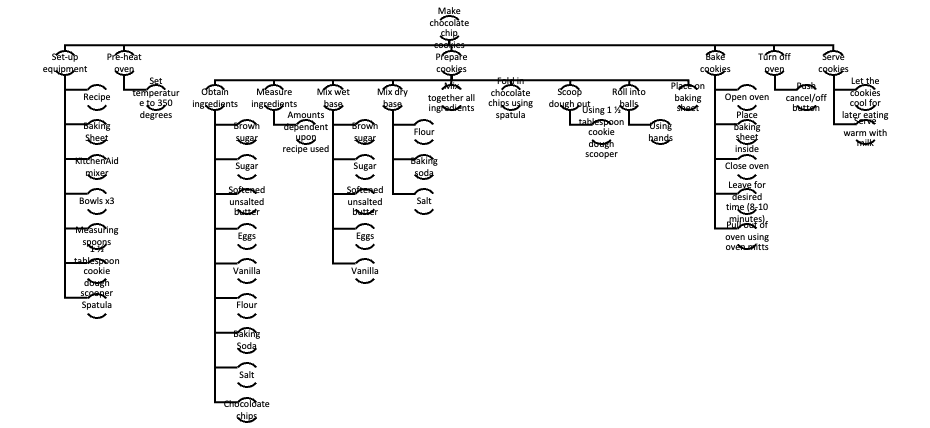

The goal of this assignment was to perform two hierarchical task analyses (HTAs): one centered on simple controls and displays, and one outside the domain of controls and displays. The first task was limited to a simple system or piece of equipment, while the second was broader in scope and not limited to technology. For the first task, I chose baking cookies in a simple oven, and for the second, I chose setting up a rooftop tent. Completing the analyses allowed me to identify areas within each task with potential for error, unintentional injury, increased cognitive load, and other issues.

task 1

For baking cookies in an oven, I identified six subtasks: setting up the necessary equipment, pre-heating the oven, preparing the cookies, baking the cookies, turning off the oven, and serving the cookies. I then broke each of these into further subtasks and addressed the relevant human factors and ergonomics considerations. The main topics addressed were visual perception, mental models, the speed-accuracy trade-off, the risk-benefit trade-off, and unintentional injury. The first analysis chart is shown below.
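An HTA is a tree: an overall goal broken into subtasks, each of which can be broken down further, conventionally numbered 1, 1.1, 1.2, and so on. Here is a minimal Python sketch of that structure using the six top-level subtasks listed above. The class and function names are hypothetical, and the lower-level subtasks from the chart are not reproduced.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Task:
    name: str
    subtasks: list[Task] = field(default_factory=list)


def numbered_outline(task: Task, prefix: str = "") -> list[str]:
    """Flatten an HTA tree into numbered lines (1, 1.1, 1.2, ...)."""
    lines = []
    for i, sub in enumerate(task.subtasks, start=1):
        number = f"{prefix}{i}"
        lines.append(f"{number} {sub.name}")
        # Recurse so nested subtasks get numbers like 2.1, 2.1.1, ...
        lines.extend(numbered_outline(sub, prefix=number + "."))
    return lines


# Top-level subtasks only; deeper levels would be nested Task objects.
BAKE_COOKIES = Task("Bake cookies in a simple oven", [
    Task("Set up the necessary equipment"),
    Task("Pre-heat the oven"),
    Task("Prepare the cookies"),
    Task("Bake the cookies"),
    Task("Turn off the oven"),
    Task("Serve the cookies"),
])

if __name__ == "__main__":
    print(f"0 {BAKE_COOKIES.name}")
    for line in numbered_outline(BAKE_COOKIES):
        print(line)
```

Because each node holds its own list of subtasks, lower levels of the analysis can be added at any depth without changing the numbering function.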

task 2

For setting up a rooftop tent, I identified four subtasks: attaching the tent to the roof, driving to the desired camping location, leveling the vehicle, and opening the tent. These subtasks involved more detailed steps and greater potential for harm, so I addressed many human factors and ergonomics considerations. During the attachment subtask, incorrect lifting posture or negligence could injure the spine, head, or other body parts. The risk-benefit trade-off also plays a large role in deciding how many people lift the tent: the fewer the people, the riskier the lift. Visual perception and top-down processing are needed when assessing whether a space is suitable for opening the tent. To level the vehicle, a user may choose to use the measure app on their phone; the app adheres to the Principle of the Moving Part and follows the same mental model as the physical tool. Finally, opening the tent requires many steps, which could exceed a user’s cognitive capacity. The second analysis chart is shown below.